Two years ago, the New York Times lifted the lid on the dangers of location tracking, but the tech industry shrugged. Now authorities are fighting back.

In a masterful 2018 exposé, “Your Apps Know Where You Were Last Night, and They’re Not Keeping It Secret,” four NY Times reporters traced individuals’ dermatology appointments, school routines, and even visits to Planned Parenthood, all inferred from a database of cellphone locations. The reporters then re-identified members of the public from the supposedly anonymized data (with their consent) and relayed their discoveries, to considerable alarm.

“It’s very scary. It feels like someone is following me, personally… I went through all [my colleagues’] phones and just told them: ‘You have to turn this off. You have to delete this.’ Nobody knew.” —Elise Lee.

The tech industry was surprised too; however, its surprise stemmed from the public’s apparent ignorance, not from the revelations. Brian Wong from ad firm Kiip summed up the consensus: ‘You would have to be pretty oblivious if you are not aware that this is going on.’ How can something the tech industry considers the status quo be unheard of, and frightening, to ordinary people? What’s at the root of this dramatic mismatch, what’s perpetuating it, and why are we seeing new headlines about it today?

Based on our own original research and that of others, here’s what we know about location data and how people perceive its collection and use.

UC Berkeley research shows that users have long been confused about when and how their location data is accessed. That might seem surprising, since the two major mobile platforms, iOS and Android, now show an indicator when location data is accessed. We know of at least two contributing factors. First, not everyone knows to look for the location indicator (in fact, effective passive indicators are quite difficult to design). Second, even if passive indicators were effective at notifying the user, researchers collected evidence as recently as 2015 that Android doesn’t reliably show the indicator in all cases.

There’s a big difference between allowing the collection of a single location data point, allowing a one-off trace to be collected, and allowing persistent location tracking. Users have a hard time appreciating that difference; advertisers and tech companies understand it well. Location has only modest value in isolation, but aggregated over time it reveals powerful patterns and habits. Sample a user’s location for several weeks and you’ll learn where she lives, where she works or studies, and the routes she commonly takes. You’ll be able to tell whether a fleeting GPS blip is part of her everyday routine or one of her deepest secrets. Historical location logs are far more valuable than one-off traces, and for the same reason they are far more powerful and potentially dangerous.
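To make the aggregation point concrete, here is a minimal Python sketch of how weeks of location samples could be reduced to a likely home and a likely workplace. It assumes a hypothetical list of `(timestamp, latitude, longitude)` samples, snaps each point to a coarse grid cell, and picks the most frequent cell at night and during weekday working hours. The time windows, grid precision, and function names are illustrative assumptions, not a description of how any particular company processes location data.

```python
from collections import Counter
from datetime import datetime
from typing import Optional

# Hypothetical sample format: (timestamp, latitude, longitude).
Sample = tuple[datetime, float, float]
Cell = tuple[float, float]


def grid_cell(lat: float, lon: float, precision: int = 3) -> Cell:
    """Snap a coordinate to a coarse grid cell (3 decimal places is roughly 100 m)."""
    return (round(lat, precision), round(lon, precision))


def is_night(ts: datetime) -> bool:
    # Assumed "at home" window: 22:00 to 06:00.
    return ts.hour >= 22 or ts.hour < 6


def is_workday_daytime(ts: datetime) -> bool:
    # Assumed "at work or school" window: Monday to Friday, 09:00 to 17:00.
    return ts.weekday() < 5 and 9 <= ts.hour < 17


def infer_anchors(samples: list[Sample]) -> dict[str, Optional[Cell]]:
    """Return the most frequent night-time and weekday-daytime grid cells."""
    night = Counter(grid_cell(lat, lon) for ts, lat, lon in samples if is_night(ts))
    day = Counter(grid_cell(lat, lon) for ts, lat, lon in samples if is_workday_daytime(ts))
    return {
        "likely_home": night.most_common(1)[0][0] if night else None,
        "likely_work": day.most_common(1)[0][0] if day else None,
    }
```

No single sample in such a log says much, but repeated night-time and weekday-morning points make the pattern obvious, while a one-off weekend visit falls outside both windows and is simply ignored.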

Consent UIs and privacy notices often stress that data is anonymized and therefore safe. However, the details matter: what data is collected, is it combined with other data sources, and how is it anonymized? By and large, it’s more accurate to say that the data is de-identified than to say it’s anonymized. Why does the distinction matter, and when should you care? Poorly implemented assurances of anonymity can often be crowbarred open with algorithms, persistence, and the right supplementary data. In 2006, two researchers combined Netflix and IMDb data to re-identify users across the two services and deduce their political preferences. By cross-referencing US census data with electoral rolls, Harvard professor Latanya Sweeney showed that more than half of the US population could be uniquely identified using just gender, date of birth, and town of residence. These examples show how trivial re-identification can be when de-identification techniques are weak. It’s difficult both to understand what makes de-identification effective and to verify whether a company has actually implemented it in its systems.
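The linkage attacks described above follow a simple recipe, sketched here in Python under stated assumptions: a “de-identified” dataset that drops names but keeps gender, date of birth, and town is joined against a public list that has names on the same fields (the quasi-identifiers). Any record whose combination matches exactly one named person is re-identified. The `k_anonymity` helper reports the size of the smallest group sharing a combination; a value of 1 means at least one record is unique. All field names, function names, and records are hypothetical.

```python
from collections import Counter, defaultdict

# Assumed quasi-identifiers, following the gender / date of birth / town example.
QUASI_IDENTIFIERS = ("gender", "dob", "town")


def qi_key(record: dict) -> tuple:
    """Project a record onto its quasi-identifier fields."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link_records(deidentified: list[dict], public_roll: list[dict]) -> dict[int, str]:
    """Map each de-identified record's index to a name when exactly one
    person in the public roll shares its quasi-identifiers."""
    names_by_key = defaultdict(list)
    for person in public_roll:
        names_by_key[qi_key(person)].append(person["name"])
    matches = {}
    for i, record in enumerate(deidentified):
        candidates = names_by_key.get(qi_key(record), [])
        if len(candidates) == 1:  # a unique match is a re-identification
            matches[i] = candidates[0]
    return matches


def k_anonymity(records: list[dict]) -> int:
    """Size of the smallest group of records sharing the same quasi-identifiers."""
    groups = Counter(qi_key(r) for r in records)
    return min(groups.values()) if groups else 0
```

Dropping the name column achieves little if the remaining columns are unique, which is why techniques such as k-anonymity generalize or suppress quasi-identifiers rather than simply removing names.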

As the NY Times article points out, apps with obvious location needs – traffic, weather, maps, etc. – frequently also use this location data for undisclosed or under-disclosed purposes like ad targeting.

While these mismatches persist, authorities will naturally stay on alert. The main players in the Western world – the EU, state regulators, and the FTC – have been actively monitoring companies’ use of data for years, but a recent case shows that local authorities are watching too.

In 2019, LA City Attorney Mike Feuer sued TWC Product and Technology (TWC), operator of The Weather Channel mobile app, along with its parent company IBM. The suit alleged that TWC and IBM misused location-tracking technology to persistently monitor users, and shared that information with third parties without adequate disclosure. The City Attorney argued that this amounted to deceptive, misleading, and unfair practices in violation of California’s Unfair Competition Law.

“For years, TWC has deceptively used its Weather Channel app to amass its users’ private, personal geolocation data — tracking minute details about its users’ locations throughout the day and night, all while leading users to believe that their data will only be used to provide them with ‘personalized local weather data, alerts and forecasts.’” (text from the initial lawsuit)

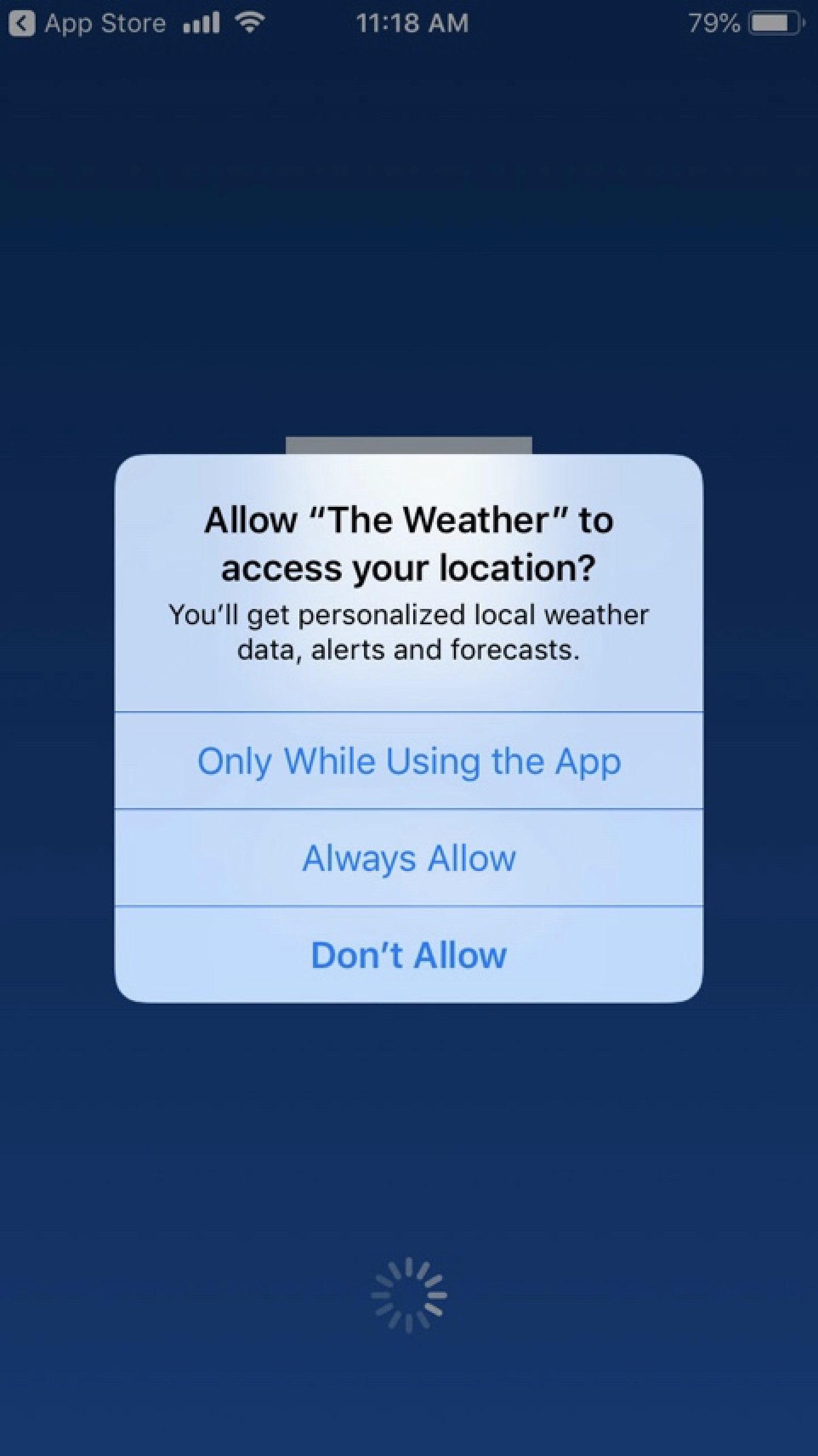

Below we have a picture of TWC’s app’s original iOS screen and disclosure:

The case alleged that TWC took advantage of the gap between what users thought was happening based on their mental model of mobile apps and permissions and what actually was happening: a typical feature of dark patterns.

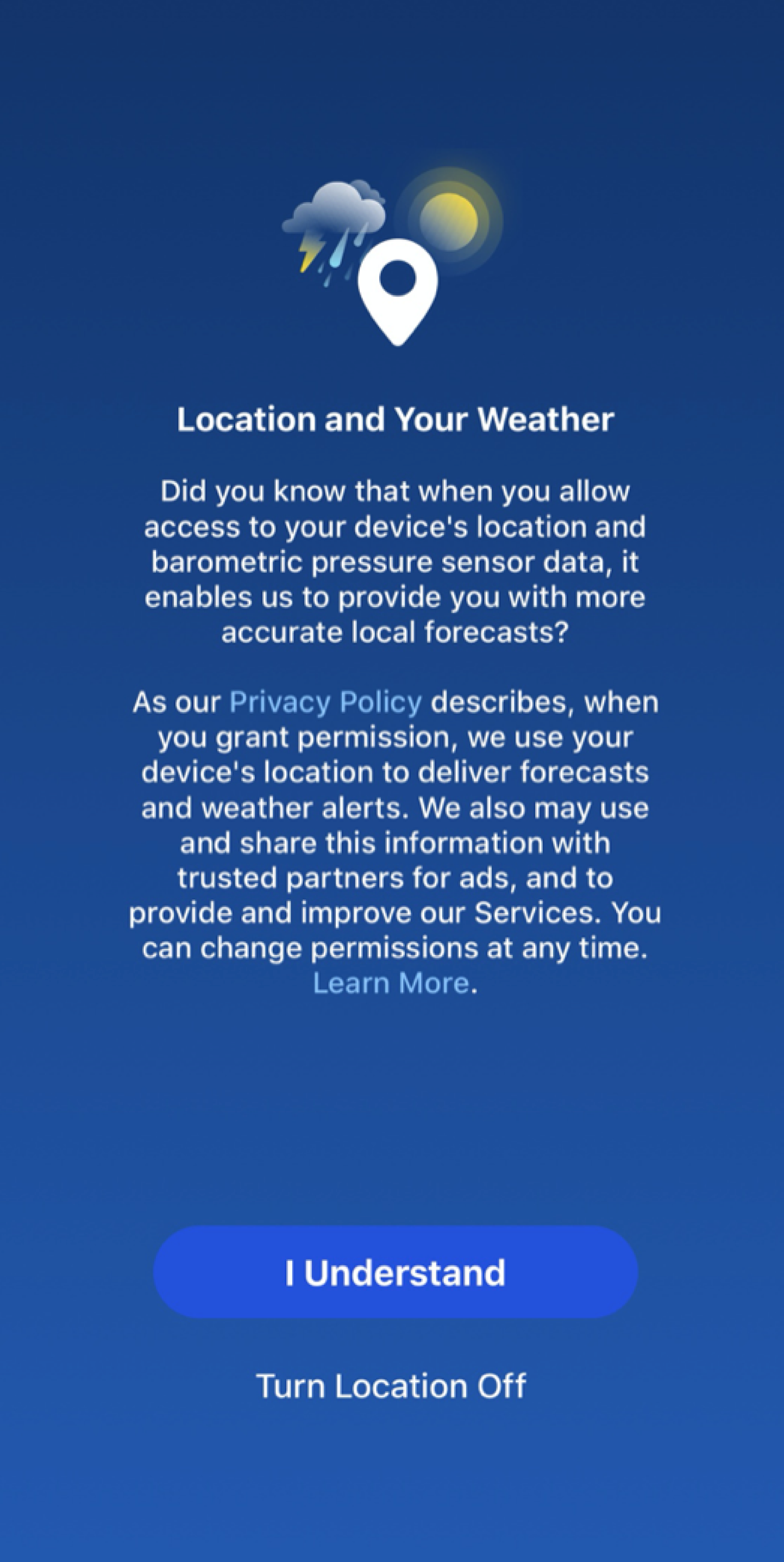

After the lawsuit was filed, the disclosure was updated to the following:

First let’s talk about this screen from the design – as opposed to the legal – perspective. Many of the design choices might bias users towards granting access:

- The use of “when”, not “if”, presumes consent before it is given.

- Data use cases are buried in the middle of a paragraph.

- The accept button has much stronger visual weight than the decline button; it even appears to already be selected.

- The decline button isn’t styled like a button.

- The label ‘Turn Location Off’ suggests declining automatic access will render the app inoperable.

The two parties recently agreed to a court-approved settlement. The settlement specifies that TWC must implement the following changes:

- Update the copy on both this disclosure screen and the linked Learn More screen to make it clear that location is collected over time.

- Update the copy to make it clear that the company is recording profiles on users with the collected location data.

- Eliminate the priming language by changing “when” to “if”.

- Update the copy to clarify that the app is still usable if location access is not granted; users can simply enter a location by hand.

Additionally, separate from the court-approved settlement, IBM agreed to donate equipment and infrastructure to LA’s Covid-tracing efforts.

So, in what way is this anything more than a minor quibble over language? If the problem can be resolved with simple copy changes, was it really that big of a deal? Not so fast. We think tech teams should note this settlement with interest, and take heed of several lessons.

We all know users don’t read privacy policies. Authorities and regulators know that too. TWC argued that all the relevant disclosure was provided in the privacy policy, meeting the requirements of the California Online Privacy Protection Act (CalOPPA). Not good enough, countered Feuer, arguing that this information, to the extent it was provided at all, was effectively buried in a 10,000-word policy, hampering transparency and informed consent. In some cases, information such as the use of location history to build user profiles was not disclosed at all.

One lesson from this case is that designers should lean strongly toward communicating sensitive data uses, along with their longer-term implications, at the moment of need. Tucking them into a privacy policy is no longer enough. In the words of Maneesha Mithal, a privacy official at the Federal Trade Commission: ‘You can’t cure a misleading just-in-time disclosure with information in a privacy policy.’ It’s best to provide all relevant information at the moment that permission to collect the data is requested, as advised in Apple’s Human Interface Guidelines. You can also request permission at launch, which is understood to be less effective than providing context in the moment, but may be necessary in certain scenarios.

Mobile OS prompts have become more specific and granular over time. Today, both iOS and Android initially allow location permission to be granted only once or only while the app is in use. Only after a user grants foreground access and continues using the app is the option to request background access unlocked.

This tightening of options is good news for consumers: it makes it harder for developers to unfairly exploit location information, and helps keep use cases transparent and relevant. But we mustn’t assume these OS controls solve privacy problems on their own.

The initial TWC suit predates some more recent evolutions in OS location controls, but even now it’s clear that the multiple purposes for which TWC used location simply wouldn’t fit within the short ‘purpose string’ available in mobile permission dialogs.

Some older definitions of and approaches to privacy don’t suit the new information habits that emerging technologies have unleashed. One way to model what’s acceptable to users is to think of data use in terms of contextual integrity. From this perspective, privacy is primarily about norms. In everyday life, people don’t see privacy in black and white; there are no expectations that cover all cases, no clear delineation between public and private. Instead, people mould their privacy expectations and behaviors according to circumstance.

In 2004, when she proposed Contextual Integrity, Helen Nissenbaum argued that privacy norms depend on the sender, the recipient, the subject, the types of information, and what she terms the principles of transmission: the justification for and purpose of the exchange, almost an implicit promise between the parties on how to appropriately treat this information. A romantic secret whispered into a friend’s ear implies certain norms; sending credit card details to an e-commerce site establishes quite different ones. Contextual integrity, then, is about handling personal information in ways that respect the appropriate norms. Respect the norms and you’re probably behaving well; contravene them and you may be violating privacy.
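Contextual integrity is a qualitative framework rather than an algorithm, but a toy encoding can make its structure clearer. The Python sketch below is a hypothetical model of our own, not anything Nissenbaum specifies: it represents each information flow by the five parameters above and treats a flow as appropriate only if some norm for the context allows it, with `*` as a wildcard. The weather-app norms and all names are illustrative assumptions.

```python
from dataclasses import astuple, dataclass

WILDCARD = "*"  # in a norm, matches any value for that parameter


@dataclass(frozen=True)
class Flow:
    """One information flow, described by the five contextual-integrity parameters."""
    sender: str
    recipient: str
    subject: str
    info_type: str
    transmission_principle: str


def matches(norm: Flow, flow: Flow) -> bool:
    """True if every parameter of the norm is a wildcard or equals the flow's value."""
    return all(n == WILDCARD or n == f for n, f in zip(astuple(norm), astuple(flow)))


def respects_context(flow: Flow, norms: list[Flow]) -> bool:
    """A flow is treated as appropriate only if some norm in the context allows it."""
    return any(matches(norm, flow) for norm in norms)


# Hypothetical norms for a weather app, written for illustration only.
WEATHER_APP_NORMS = [
    Flow("user", "weather app", WILDCARD, "current location", "provide local forecast"),
    Flow("user", "weather app", WILDCARD, "typed-in city", "provide local forecast"),
]
```

In this toy model the same location data passes in one flow and fails in another, because the verdict depends on the whole tuple (who receives it, in what form, and why), not on the data type alone.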

Digital designers should be nodding their heads here: this is well aligned with the familiar UX research and design process. To respect contextual integrity, we should first identify these privacy norms through research, looking particularly at the variables Nissenbaum suggests. Then we should design flows and interfaces that map well to these mental models and expectations we’ve uncovered.

It’s clear to us that the TWC lawsuit was fundamentally about an alleged violation of contextual integrity. The general public will naturally expect a weather app to use location data to provide a weather forecast; by tracking location histories and sharing location data with others, TWC allegedly broke those norms. The lawsuit suggests that a user granting location permission (whether or not they read a privacy notice) isn’t enough on its own: if the eventual use of that data violates contextual integrity, an app may be on slippery ground.

The last decade has seen a range of new privacy laws, from the GDPR to the CCPA and Brazil’s new LGPD. But although every regulation eventually settles on an agreed wording, interpretation and enforcement remain fluid for years thereafter. Even the most precise regulation is finally understood only through a prolonged dance of oversteps, challenges, and wing-clips: lawsuits, prosecutions, and test cases.

Even if you think your design meets the letter of the law, it may still attract a legal challenge. This is particularly true of emerging technologies: the new information habits they enable are often fertile ground for regulators and law enforcement agencies looking to test the boundaries of new laws.

Although the TWC settlement isn’t a finding of guilt or violation, it will nevertheless influence future cases, acting as a warning to tech companies not to be tempted to violate contextual integrity and emerging privacy law. Authorities are starting to watch closely, and alongside high-profile bodies like the FTC, the EU, or national information commissions, these authorities include local law enforcement, increasingly willing to take rapid, almost entrepreneurial action against perceived violations.

The risks involved aren’t just financial or regulatory. Sure, fines and compensation can be painful (just ask British Airways, initially threatened with a £183 million GDPR fine that was later reduced to £20 million), but as consumers and the press learn more about privacy, reputational risks grow too. These risks can even extend to hiring: tech workers are increasingly mobilizing against unethical practices, with reports that certain tech giants have seen candidate acceptance rates plummet as potential employees reject firms with toxic reputations.

Privacy issues therefore have far deeper implications than you might think. They affect product management and design, not just legal and engineering; they hurt customer and staff retention, not just the legal budget. Privacy is a contest that no one should want to lose.